RSS feeds parser and core models

Parser

There are so many RSS parser out there. I’ve tried lots of them, including JavaScript, Elixir and Rust versions. So far the most reliable one I’ve tested is the Rust version from rust-syndication. There is even a Elixir fast_rss wrapped on top of the rss.

The wrapper is pretty lightweight rustler makes the whole interop with Rust with Elixir so much easier and straightforward.

I could’ve just use fast_rss, but I also want to parse Atom feeds as well. So I ended up took the approach of fast_rss and build another wrapper around atom feed parser on top of Rust’s atom.

This is what the Rust code wrapper looks like.

Core models

Once we have the RSS and Atom feed parser, along with JSON feed. We would ended up with a map data structure of the feeds. Since there are so many stuff in the RSS feeds, all I want for now is just part (title, date, content, author) of that. So I would like to be working from the backwards. Start from what the UI might looks like, then define the core models to support this UI.

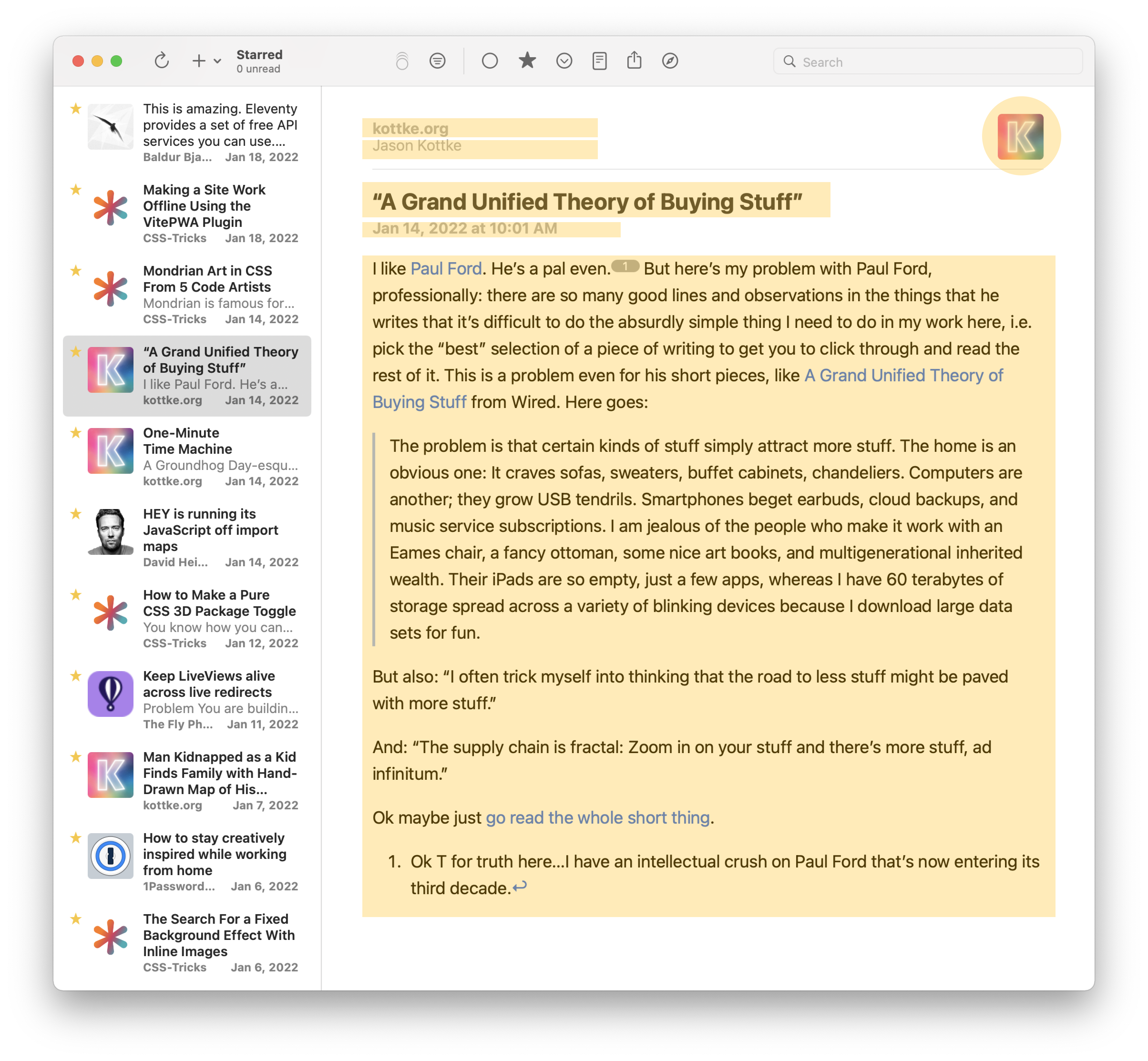

I took a screenshot of an awesome app called NetNewsWire (You should try it if you haven’t).

By looking at the UI, here you can find the minimal information we would need to extract from the RSS feed.

defmodule Item do

defstruct id: nil, title: nil, date: nil, content: nil, link: nil, authors: []

end

defmodule Feed do

defstruct link: nil, title: nil, favicon: nil, items: []

end

These two models by no means are the final version, but it’s a good start for us to start to create our minimal RSS reader UI. Once we have the model in mind, the fun part of leveraging the power of Elixir begins.

Pattern matching to extract

Okay, so now we have both the core models and the parsed RSS/Atom feeds in Elixir’s map format. Time to normalize/extract the key contents into our defined core models above. I think that’s where the Elixir’s pattern matching really shines.

For example, we would like to extract the content of the feed item. For different rss versions, the place they put the content will be different. Here are a couple of examples and its Elixir extraction function:

The entire content field extracting including error case handling (nothing can be found) can boils down to this:

def get_item_content(%{

"content" => %{

"value" => value

}

})

when is_binary(value),

do: value

def get_item_content(%{"content" => content}) when is_binary(content),

do: content

def get_item_content(%{

"description" => %{

"value" => value

}

})

when is_binary(value),

do: value

def get_item_content(%{"description" => description}) when is_binary(description),

do: description

def get_item_content(%{"content_html" => content_html}), do: content_html

def get_item_content(_), do: "No Content"

Note:

is_binaryhere is basically trying to check if the given value is a string. You can read more here at Elixir’s doc: https://elixir-lang.org/getting-started/binaries-strings-and-char-lists.html

I don’t know about you. But I found extremely satisfying writing pattern matching comparing to nested if/else or guard statement. I think mostly because how human’s brain works, we are exceptionally good at pattern matching. Instead of one step at a time checking x then y, we describe what the stuff we want out of the given shape of data.

Here you can find the all the data extraction related code. Please notice this project is still being actively developed.

Now we actually have a minimum working version of given an feed url, parsing and extracting them into a the desired data model we want. Next, we will see how can leverage the part we already have so far to make something useful.